Efficient Event Filtering and Runtime Enforcement Strategies

Kubernetes has become the standard for container orchestration. However, securing production environments remains a concern for organizations, because Kubernetes does not provide built-in security observability for examining attacks. eBPF helps close that gap.

eBPF is one of the most talked-about topics in the industry. It attracts not only developers working on the Linux kernel but also the cloud-native community.

In this blog, we will discuss Tetragon, an open-source project from Cilium that provides kernel-level transparency, deep observability, and security at run-time. But before that, let’s understand a bit about eBPF.

What is eBPF?

eBPF (Extended Berkeley Packet Filter) is a technology that extends the capabilities of the Linux kernel at runtime, that is, without changing the kernel source code or rebooting. It is often said that eBPF does to Linux what JavaScript does to HTML.

Using eBPF, we can program the Linux kernel dynamically and gain insight into what it is doing. eBPF is mainly used for networking, security, and observability.

eBPF programs are typically written in a restricted C-like language, compiled into bytecode, and then loaded into the kernel, where a verifier checks them before they are allowed to run.

Because eBPF is so widely used, security teams face questions such as ‘Who is watching eBPF?’ and ‘Which eBPF programs were loaded, and who loaded them?’ In short, they need a way to track and audit eBPF programs.

These questions can be answered by Tetragon, an eBPF-based security observability and runtime enforcement tool. Before going deep into Tetragon, let’s explore the terms security observability and runtime enforcement.

What is Security Observability and Runtime Enforcement?

Security Observability

Cloud-native environments are often dynamic and distributed, so to secure them one must know what is happening inside. To prevent attacks in advance or at run-time, one needs to observe the system and its applications, report any unwanted activity, and take the required action.

Runtime Enforcement

Runtime enforcement means preventing threats and imposing security policies in real time while maintaining the operational agility of the environment. This can be achieved by hooking into the kernel and system calls. In simple words, it means detecting malicious events and stopping them before they can cause any damage.

Let’s understand now what Tetragon is and how it works.

What is Tetragon?

Tetragon is an eBPF-based security observability and real-time runtime enforcement tool developed as part of the Cilium project, which was created by Isovalent. It provides deep visibility into the system without any application changes. It helps in detecting security-related events such as:

- Process execution events

- System call activity

- I/O activities such as network and file activities

Tetragon can run on any Linux distribution, and deploying Cilium is not required to run it. Tetragon is also Kubernetes-aware: it understands namespaces, pods, and other resources and can detect security-related events for them.

Architecture of Tetragon

The diagram below shows the architecture of Tetragon. As a security observability and runtime enforcement tool, Tetragon hooks into the Linux kernel so that it can track the kernel runtime, system calls, the TCP/IP stack, process execution, and filesystems, and monitor cgroups and namespaces. Using this kernel-level data, it can also monitor what is happening inside the pods running in the cluster.

This may sound like a lot of overhead, since Tetragon logs and monitors so many activities with eBPF. To address this, Tetragon includes a Smart Collector, which filters and aggregates the required information inside the kernel and sends only that to the Tetragon agent running in user space. Tetragon also integrates with Prometheus, Grafana, and other tools for further analysis.

To understand how this is achieved, we must explore how Tetragon functions in the kernel via eBPF.

Tetragon is flexible: it can hook into any Linux kernel function and filter on its arguments, return value, associated process metadata, files, and other attributes. Because users can customise tracing policies, they can address a wide range of security and observability use cases.

Tetragon hooks deep in the kernel, where user-space applications cannot manipulate the data structures being inspected. This avoids common problems with syscall tracing, where data is read incorrectly, altered, or missing because of page faults and similar issues.

Rules are written as security policies, which can be injected into the Tetragon agent through Kubernetes custom resources (CRDs), a JSON API, or policy engines such as Open Policy Agent (OPA); the agent then enforces these rules in the kernel. The policies are stored in the Rule Engine within the kernel. The eBPF kernel runtime ensures that the policies are followed: if an application violates one, the offending action can be blocked or the application can be terminated. These security policies are known as tracing policies.

TracingPolicy

TracingPolicy is a user-configurable Kubernetes custom resource that describes what Tetragon will observe, along with the actions and enforcement to apply. Tetragon reads this resource and translates it into eBPF instrumentation that is loaded into the kernel, so that exactly the events we care about are observed. Currently, kprobe and tracepoint events are supported in the TracingPolicy object.

The TracingPolicy configuration file helps us in two different aspects:

- Filter the event logs

- Security enforcement

Filter the Event Logs

TracingPolicy is a custom resource object applied to Tetragon to filter events according to the user’s requirements, for example for file monitoring or network monitoring.

Run-time Security Enforcement

TracingPolicy objects are also used for security enforcement at run-time. For example, a policy can block access to system information (uname -a) from a running pod, restrict the creation of symbolic links inside a running pod, or generate alerts or kill a process if it changes its privileges.

The following is an example TracingPolicy that is used to view TCP connection events through Tetragon.

# tcp-connect.yaml
apiVersion: cilium.io/v1alpha1
kind: TracingPolicy
metadata:
  name: "connect"
spec:
  kprobes:
  - call: "tcp_connect"
    syscall: false
    args:
    - index: 0
      type: "sock"
  - call: "tcp_close"
    syscall: false
    args:
    - index: 0
      type: "sock"
  - call: "tcp_sendmsg"
    syscall: false
    args:
    - index: 0
      type: "sock"
    - index: 2
      type: int

In the spec field,

- We instruct Tetragon to hook only the tcp_connect, tcp_sendmsg, and tcp_close kprobes.

- syscall is set to false for each of them. This field indicates that Tetragon will hook a regular kernel function rather than a system call.

- Tetragon maps the list of function arguments to include in the trace output. To hook the kernel function properly, the BPF code needs to know the type and position of each argument so that it can be collected and printed in the trace output. Above, the first argument (index 0) of every kprobe in this policy has type sock.

Tetragon extracts information according to the rules defined in the policy. Users can also create and apply custom policies for run-time enforcement as per their needs.
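To make the structure of such a policy easier to see, the sketch below assembles the same tcp-connect policy as a plain Python dictionary and prints it as JSON, a format that kubectl also accepts for manifests. The helper names kprobe and tracing_policy are hypothetical and are not part of Tetragon; only the field names are taken from the policy above.

```python
import json


def kprobe(call, args, syscall=False):
    """Build one kprobe entry; args is a list of (index, type) pairs."""
    return {
        "call": call,
        "syscall": syscall,
        "args": [{"index": index, "type": type_} for index, type_ in args],
    }


def tracing_policy(name, kprobes):
    """Wrap kprobe entries in a TracingPolicy manifest (hypothetical helper)."""
    return {
        "apiVersion": "cilium.io/v1alpha1",
        "kind": "TracingPolicy",
        "metadata": {"name": name},
        "spec": {"kprobes": kprobes},
    }


# Same hooks and argument positions as tcp-connect.yaml above.
policy = tracing_policy(
    "connect",
    [
        kprobe("tcp_connect", [(0, "sock")]),
        kprobe("tcp_close", [(0, "sock")]),
        kprobe("tcp_sendmsg", [(0, "sock"), (2, "int")]),
    ],
)

if __name__ == "__main__":
    # Print the manifest as JSON so it can be saved to a file and applied.
    print(json.dumps(policy, indent=2))
```

Generating the manifest this way is only a convenience when many similar hooks are needed; writing the YAML by hand, as above, works just as well.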

tetra-CLI

The tetra CLI is a full-featured tool shipped with Tetragon for analysing and visualising logs, making them more accessible and easier to understand. It is used to interact with the Tetragon server and to filter events by pod, host, process, namespace, and so on.

Lab with Tetragon

In this lab, we will perform the following tasks:

- How to Deploy and Manage Tetragon?

- Install tetra CLI.

- Checking Logs with Tetragon.

- How to filter Application logs?

- How to apply security enforcement at run-time?

How to Deploy and Manage Tetragon?

There are multiple ways to deploy Tetragon. It can be deployed from a binary package, as a Docker container, or as pods in a Kubernetes cluster. Here we will set it up in Kubernetes.

Click on Lab Setup button and explore how Tetragon works.

- Install Tetragon as a DaemonSet in the Kubernetes environment using Helm.

helm repo add cilium https://helm.cilium.io

helm repo update

helm install tetragon cilium/tetragon -n kube-system

- After installation, inspect the Tetragon DaemonSet to get more information.

kubectl get ds tetragon -n kube-system

You will observe that there is one init container (tetragon-operator) and two containers (export-stdout and tetragon).

Note: By default, Tetragon lists kube-system namespace events only.

Next, try to extract event logs.

Observe the Logs with Tetragon

- To observe the raw JSON events, open another terminal and execute the following command.

kubectl logs -n kube-system -l app.kubernetes.io/name=tetragon -c export-stdout -f

- To get the events in a more readable format, install the tetra CLI.

curl -L https://github.com/cilium/tetragon/releases/latest/download/tetra-linux-amd64.tar.gz | tar -xz

sudo mv tetra /usr/local/bin

- Observe the events with the tetra command.

kubectl logs -n kube-system -l app.kubernetes.io/name=tetragon -c export-stdout -f | tetra getevents -o compact

OR

One can also get the event logs from the Tetragon container itself which has tetra cli in it.

kubectl exec -it -n kube-system ds/tetragon -c tetragon -- tetra getevents -o compact
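To give a feel for what a compact view does with the raw JSON, here is a minimal Python sketch that reduces one event line to a short summary. It is not how tetra is implemented. The sample event and its field names (process_exec, process, binary, arguments, pod) are assumptions based on the general shape of Tetragon’s JSON export and may differ between versions.

```python
import json

# Illustrative sample only: the field names are assumed, not taken from a real log.
SAMPLE_LINE = json.dumps({
    "process_exec": {
        "process": {
            "binary": "/usr/bin/ls",
            "arguments": "-l",
            "pod": {"namespace": "default", "name": "demo-pod"},
        }
    },
    "node_name": "node-1",
})


def compact(line):
    """Return a one-line summary of a JSON event, or None if it cannot be parsed."""
    try:
        event = json.loads(line)
    except json.JSONDecodeError:
        return None
    if not isinstance(event, dict):
        return None
    # Treat the first top-level key that holds a "process" object as the event type.
    for kind, body in event.items():
        if isinstance(body, dict) and isinstance(body.get("process"), dict):
            proc = body["process"]
            pod = proc.get("pod") or {}
            where = "{}/{}".format(pod.get("namespace", "-"), pod.get("name", "-"))
            parts = (proc.get("binary", ""), proc.get("arguments", ""))
            command = " ".join(p for p in parts if p)
            return "{} {} {}".format(kind, where, command)
    return None


if __name__ == "__main__":
    print(compact(SAMPLE_LINE))
```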

We can get all event logs from the kube-system namespace. Let’s try to deploy a demo application and retrieve logs from the application through Tetragon.

How to Filter Application Logs?

We will install a demo application and try to extract events (read/write access) from the running application pods.

Install RSVP Application

An RSVP is a response to an invitation. This application collects the responses and shows the total, so that the host can plan accordingly.

- Install the RSVP application using the YAML manifests attached in the lab environment.

kubectl apply -f pv.yaml -f pvclaim.yaml

kubectl apply -f frontend.yaml,backend.yaml

kubectl get all -n default

Please click on app-port-30001 to view our application.

To view the application’s events, we will apply a TracingPolicy.

Apply TracingPolicy to filter read/write access

- Apply the TracingPolicy file-monitoring.yaml, which monitors and filters read/write access on /data, as this is the location where the database data is mounted.

cat file-monitoring.yaml

# file-monitoring.yaml
apiVersion: cilium.io/v1alpha1
kind: TracingPolicy
metadata:
  name: "file-monitoring"
spec:
  kprobes:
  - call: "security_file_permission"
    syscall: false
    return: true
    args:
    - index: 0
      type: "file" # (struct file *) used for getting the path
    - index: 1
      type: "int" # 0x04 is MAY_READ, 0x02 is MAY_WRITE
    returnArg:
      index: 0
      type: "int"
    returnArgAction: "Post"
    selectors:
    - matchArgs:
      - index: 0
        operator: "Prefix"
        values:
        - "/data/" # the files that we care
  - call: "security_mmap_file"
    syscall: false
    return: true
    args:
    - index: 0
      type: "file" # (struct file *) used for getting the path
    - index: 1
      type: "uint32" # the prot flags PROT_READ(0x01), PROT_WRITE(0x02), PROT_EXEC(0x04)
    - index: 2
      type: "nop" # the mmap flags (i.e. MAP_SHARED, ...)
    returnArg:
      index: 0
      type: "int"
    returnArgAction: "Post"
    selectors:
    - matchArgs:
      - index: 0
        operator: "Prefix"
        values:
        - "/data/" # the files that we care
  - call: "security_path_truncate"
    syscall: false
    return: true
    args:
    - index: 0
      type: "path" # (struct path *) used for getting the path
    returnArg:
      index: 0
      type: "int"
    returnArgAction: "Post"
    selectors:
    - matchArgs:
      - index: 0
        operator: "Prefix"
        values:
        - "/data/" # the files that we care

kubectl apply -f file-monitoring.yaml

kubectl get tracingpolicy
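The integer argument captured for security_file_permission is a bit mask, and the Prefix selector keeps only paths under /data/. The following Python sketch shows how such a mask could be decoded and how a prefix check behaves. It is a user-space approximation for reading events, not Tetragon’s in-kernel logic. The MAY_READ and MAY_WRITE values come from the policy comments, MAY_EXEC and MAY_APPEND are the neighbouring bits defined in the Linux kernel headers, and the function names are hypothetical.

```python
# Permission bits as defined in the Linux kernel (include/linux/fs.h).
MAY_EXEC = 0x01
MAY_WRITE = 0x02
MAY_READ = 0x04
MAY_APPEND = 0x08

FLAGS = [
    (MAY_EXEC, "MAY_EXEC"),
    (MAY_WRITE, "MAY_WRITE"),
    (MAY_READ, "MAY_READ"),
    (MAY_APPEND, "MAY_APPEND"),
]


def decode_mask(mask):
    """Return the names of the permission bits that are set in mask."""
    return [name for bit, name in FLAGS if mask & bit]


def matches_prefix(path, prefixes=("/data/",)):
    """Approximate the Prefix operator: true if path starts with any listed value."""
    return any(path.startswith(prefix) for prefix in prefixes)


if __name__ == "__main__":
    print(decode_mask(0x04))                # a read access
    print(decode_mask(0x06))                # read and write bits together
    print(matches_prefix("/data/db.json"))  # under /data/, so it is kept
    print(matches_prefix("/etc/hosts"))     # outside /data/, so it is filtered out
```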

- To list the pods

kubectl get pods

In our case, we will list the event logs of the backend pod, as it stores the data submitted through the application’s frontend.

- To get logs of a particular pod

kubectl logs -n kube-system -l app.kubernetes.io/name=tetragon -c export-stdout -f | tetra getevents -o compact --namespaces default --pods <pod-name>

Here, our pod is running in the default namespace, so pass that namespace to the tetra CLI. Replace <pod-name> with the name of the rsvp-db backend pod.
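The same kind of filtering can be sketched in a few lines of Python over the raw JSON stream, which can help when events need to be post-processed in a script. This is an illustration only: the event layout (a top-level event key holding a process object with a pod field) is assumed, the pod names in the sample are made up, and matching on a pod-name prefix is a choice made for this sketch rather than a statement about how tetra matches pods.

```python
import json

# Made-up sample lines; real events carry many more fields.
SAMPLE_LINES = [
    json.dumps({"process_exec": {"process": {
        "binary": "/bin/sh",
        "pod": {"namespace": "default", "name": "rsvp-db-abc"}}}}),
    json.dumps({"process_exit": {"process": {
        "binary": "/bin/ls",
        "pod": {"namespace": "kube-system", "name": "coredns-xyz"}}}}),
    "not json",
    "",
]


def pod_of(event):
    """Return the pod object of an event, or an empty dict if there is none."""
    for body in event.values():
        if isinstance(body, dict) and isinstance(body.get("process"), dict):
            return body["process"].get("pod") or {}
    return {}


def filter_events(lines, namespace, pod_prefix=""):
    """Yield parsed events whose pod is in namespace and whose name starts with pod_prefix."""
    for line in lines:
        line = line.strip()
        if not line:
            continue
        try:
            event = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip anything that is not a JSON event
        if not isinstance(event, dict):
            continue
        pod = pod_of(event)
        name = pod.get("name") or ""
        if pod.get("namespace") == namespace and name.startswith(pod_prefix):
            yield event


if __name__ == "__main__":
    for event in filter_events(SAMPLE_LINES, "default", "rsvp-db"):
        print(json.dumps(event))
```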

Now, add some data in the application’s front-end and see the events.

- To remove the TracingPolicy applied on the Tetragon

kubectl delete -f file-monitoring.yaml

- We can also achieve network observability by applying the tcp-connect.yaml manifest mentioned above.

cat tcp-connect.yaml

kubectl apply -f tcp-connect.yaml

kubectl get tracingpolicy

Now check the logs of the DB pod again. You will see that it has established a TCP connection with the frontend pod.

Verify this by checking the IP addresses of both pods.

kubectl get pods -o wide

- Delete the above TracingPolicy.

kubectl delete -f tcp-connect.yaml

Next, we will explore how to achieve security enforcement by applying a TracingPolicy at run-time.

How to Apply Security Enforcement at Run-Time?

We will create a symlink under the running pod. Then we will try to restrict symlink creation in that pod with the help of the tracing policy.

Symbolic Link Creation in Running Pod

A symbolic link is a special file that points to another file or directory. First, we will create a pod using the Ubuntu image and create a symlink to the /etc/passwd file within the running pod.

- Keep watching the Tetragon event logs in one terminal and create and run a pod in another terminal.

kubectl run cloudyuga --image=ubuntu sleep infinity

kubectl get pods

- To start a process (a shell) in the running pod

kubectl exec -it cloudyuga -n default -- bash

- Now, create a symbolic link in the running pod.

ln -s /etc/passwd symlink-cy

- Verify symlink

ls -l

- You will find the symlink creation in tetragon events in the other terminal.

kubectl logs -n kube-system -l app.kubernetes.io/name=tetragon -c export-stdout -f | tetra getevents -o compact

- Remove this link and exit from the pod.

rm symlink-cy

Restrict symlink creation

- Now, we will apply a TracingPolicy to enforce restrictions on symbolic link creation. The TracingPolicy file restrict-symlink.yaml is attached in Lab-Setup.

cat restrict-symlink.yaml

# restrict-symlink.yaml
apiVersion: cilium.io/v1alpha1
kind: TracingPolicy
metadata:
  name: "sys-symlink-passwd"
spec:
  kprobes:
  - call: "sys_symlinkat"
    syscall: true
    args:
    - index: 0
      type: "string"
    - index: 1
      type: "int"
    - index: 2
      type: "string"
    selectors:
    - matchArgs:
      - index: 0
        operator: "Equal"
        values:
        - "/etc/passwd\0"
      matchActions:
      - action: Override
        argError: -1

kubectl apply -f restrict-symlink.yaml

kubectl get tracingpolicy

- After applying the policy, exec in the pod to create a new process

kubectl exec -it cloudyuga -- bash

- Try to create a symbolic link again.

ln -s /etc/passwd symlink-new

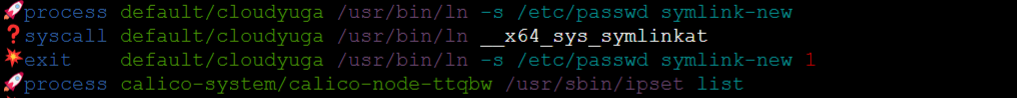

You will observe that the user will not be able to create a symbolic link in the pod due to the tracing policy in action.
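The argError: -1 in the policy is the value that the overridden system call returns to the caller. Assuming the usual Linux convention that a failing system call returns a negated errno value, -1 corresponds to EPERM, which tools such as ln typically report as a permission error. The short Python sketch below looks up that mapping; the function name describe_override is hypothetical.

```python
import errno
import os


def describe_override(arg_error):
    """Map a negative error value, as used in argError, to its errno name and message."""
    code = -arg_error
    name = errno.errorcode.get(code, "UNKNOWN")
    return name, os.strerror(code)


if __name__ == "__main__":
    name, message = describe_override(-1)
    print(name, "-", message)
```

Choosing a different negative value in argError would surface as a different error in the calling process, so -1 is a natural choice when the intent is to signal that the operation is not permitted.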

- Observe the Tetragon events

Likewise, we can apply other filters and security enforcement by creating our own TracingPolicy objects and hooking them up to different kernel functions as required. Please refer to the TracingPolicy examples to explore more.

Advantages of Tetragon

As a robust, open-source observability tool, Tetragon offers several advantages:

- It provides a powerful observability layer.

- It enables security enforcement at run-time.

- It delivers insights without modifying application source code.

- Observability data is collected transparently from the kernel.

- It imposes low overhead on the system.

- It is compatible with other observability tools like Fluentd, Prometheus, OpenTelemetry, etc.

Limitations of Tetragon

- Tetragon policies are low-level, so writing them requires kernel-level and container-level knowledge.

Conclusion

In this blog, we introduced Tetragon, an open-source tool that hooks into the kernel to provide smart, deep observability and run-time security enforcement without changing application source code. We also showed how application-specific event data can be extracted and how to restrict symbolic link creation within a pod.

References

[1] https://ebpf.io/what-is-ebpf/#verification

[2] https://tetragon.cilium.io/docs/getting-started/explore-security-observability-events/