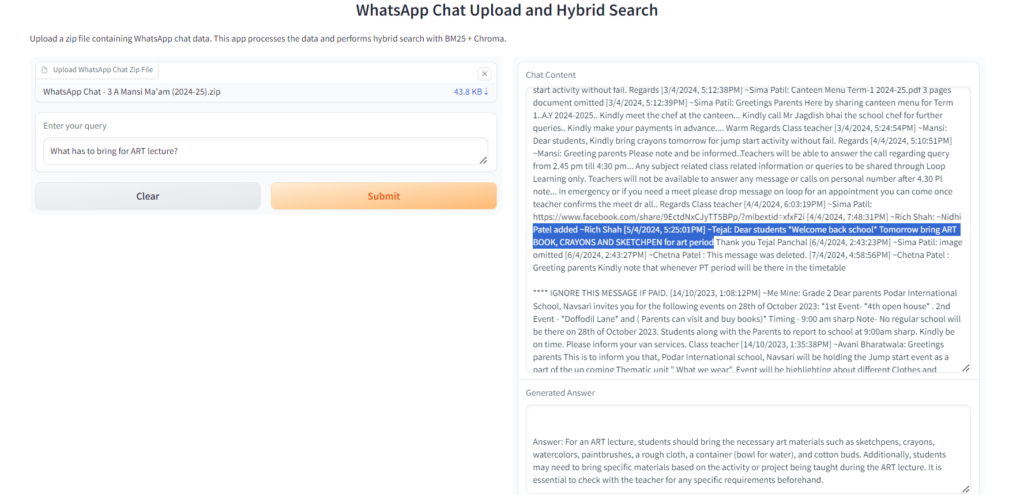

Description

This is a Gradio-based application that enables users to upload a zip file containing WhatsApp chat data and perform a hybrid search using both BM25 (sparse retrieval) and Chroma (dense retrieval). The app processes chat messages, indexes them, and allows users to query the chat data. After uploading the chat data and entering a query, the app returns search results from both BM25 and Chroma. It also generates an expert-level answer using a language model based on the results of the search.

The application is ideal for analyzing WhatsApp chats, retrieving relevant information, and generating insights or answers in response to specific user queries.
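As a rough illustration of the upload step, the sketch below reads the chat text out of a zip archive using the standard `zipfile` module. It is not the app's actual code: the helper name `read_chat_text`, the in-memory demo archive, and the assumption that the chat is stored as a UTF-8 `.txt` member are all hypothetical.

```python
import io
import zipfile


def read_chat_text(zip_bytes: bytes) -> str:
    """Return the text of every .txt member in the archive, joined by newlines.

    Hypothetical helper: assumes the export is UTF-8 and lives in .txt members.
    """
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        names = sorted(n for n in archive.namelist() if n.lower().endswith(".txt"))
        return "\n".join(
            archive.read(name).decode("utf-8", errors="replace") for name in names
        )


# Build a tiny archive in memory so the sketch runs without a real export.
buffer = io.BytesIO()
with zipfile.ZipFile(buffer, "w") as demo:
    demo.writestr("chat.txt", "12/31/23, 9:15 PM - Alice: Dinner on Friday?")
    demo.writestr("photo.jpg", b"not text")  # non-text members are ignored

chat_text = read_chat_text(buffer.getvalue())
print(chat_text)
```

Reading from bytes rather than a path keeps the helper independent of how the upload widget hands over the file.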

Model Inference

The application utilizes the mistralai/Mistral-7B-Instruct-v0.3 model from Hugging Face.

Tools Used

- Gradio: A Python library used to build and deploy machine learning applications with a user-friendly web interface.

- Zipfile: To extract and process zip files containing WhatsApp chat data.

- Chroma: Used as a vector store for dense embeddings. It allows for similarity searches based on the chat content using embeddings.

- BM25: A ranking function used for sparse (keyword-based) retrieval. It scores each message by how well its tokens match the tokens in the query.

- HuggingFace Embeddings: Hugging Face models are used to generate embeddings for dense search retrieval, making the search process more semantically rich.

- LangChain: This library simplifies the handling of different language model tasks, document processing, and chunking for text-based tasks.

- NLTK: For tokenizing the text data during the chat processing.

- dotenv: To load environment variables like API tokens from .env files securely.
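To make the sparse side of the search more concrete, here is a minimal pure-Python sketch of Okapi BM25 scoring. It is a stand-in, not the app's implementation: `tokenize` and `bm25_scores` are hypothetical helpers, the regex tokenizer replaces NLTK, and the `k1`/`b` defaults and the non-negative IDF variant are assumptions chosen for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase word tokenizer (an assumed stand-in for NLTK tokenization)."""
    return re.findall(r"\w+", text.lower())


def bm25_scores(query_tokens, corpus_tokens, k1=1.5, b=0.75):
    """Score every tokenized document against the query with Okapi BM25."""
    n_docs = len(corpus_tokens)
    if n_docs == 0:
        return []
    avg_len = sum(len(doc) for doc in corpus_tokens) / n_docs

    # Document frequency: in how many documents each term appears.
    doc_freq = Counter()
    for doc in corpus_tokens:
        doc_freq.update(set(doc))

    scores = []
    for doc in corpus_tokens:
        term_freq = Counter(doc)
        score = 0.0
        for term in query_tokens:
            tf = term_freq.get(term, 0)
            if tf == 0:
                continue
            df = doc_freq[term]
            # Non-negative IDF variant (assumption; other variants exist).
            idf = math.log(1 + (n_docs - df + 0.5) / (df + 0.5))
            # Term frequency saturates via k1; b controls length normalization.
            length_norm = 1 - b + b * len(doc) / avg_len
            score += idf * tf * (k1 + 1) / (tf + k1 * length_norm)
        scores.append(score)
    return scores


messages = [
    "Dinner at the Italian place on Friday?",
    "I booked the flight tickets for Monday",
    "Friday dinner works, see you at eight",
]
scores = bm25_scores(tokenize("friday dinner"), [tokenize(m) for m in messages])
ranked = sorted(zip(scores, messages), reverse=True)
for score, message in ranked:
    print(f"{score:.3f}  {message}")
```

Messages that share no token with the query score 0.0, which is why a dense retriever is a useful complement: it can still surface messages that are semantically related but worded differently.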

Try It Out!

Create message dump

To export your WhatsApp conversation(s), complete the following steps:

- Open the target conversation.

- Click the three dots in the top right corner and select “More”.

- Then select “Export chat” and choose “Without media”.

- Save the .zip file and extract it to get the <file_name_chat>.txt file. Use this file in the following app.
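Once you have the exported text, each line can be split into a timestamp, a sender, and a message. The sketch below shows one way to do that. The line layout `12/31/23, 9:15 PM - Alice: Hello` is an assumption: the export format varies by platform and locale, so the pattern may need adjusting for your file. `STAMP_RE` and `parse_chat` are hypothetical names, not part of the app.

```python
import re

# Assumed layout: "<date>, <time> - <sender>: <text>".
# Adjust the pattern if your export uses a different date or time format.
STAMP_RE = re.compile(
    r"^(\d{1,2}/\d{1,2}/\d{2,4}), (\d{1,2}:\d{2}(?:\s?[AaPp][Mm])?) - (.*)$"
)


def parse_chat(raw):
    """Parse exported chat text into a list of message dicts."""
    messages = []
    current = None  # message that continuation lines should be appended to
    for line in raw.splitlines():
        match = STAMP_RE.match(line)
        if match:
            date, time, rest = match.groups()
            sender, separator, text = rest.partition(": ")
            if separator:
                current = {"date": date, "time": time, "sender": sender, "text": text}
                messages.append(current)
            else:
                # Timestamped line without a sender: treat as a system notice.
                current = None
        elif current is not None and line.strip():
            # No timestamp: this line continues a multi-line message.
            current["text"] += "\n" + line
    return messages


sample = """12/31/23, 9:00 PM - Messages and calls are end-to-end encrypted.
12/31/23, 9:15 PM - Alice: Dinner on Friday?
12/31/23, 9:16 PM - Bob: Sure, 8 pm works.
Bring the tickets too."""

parsed = parse_chat(sample)
for message in parsed:
    print(message["sender"], "->", message["text"])
```

Keeping multi-line messages together matters for retrieval, since splitting them would index fragments without a sender or timestamp.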

Preview: