A low-risk approach for Developers and Administrators

Linux provides the sysctl mechanism to modify kernel behavior at runtime. This is useful in many situations, for example tuning the kernel for high-performance computing applications, configuring application core dump settings, modifying network settings, or experimenting.

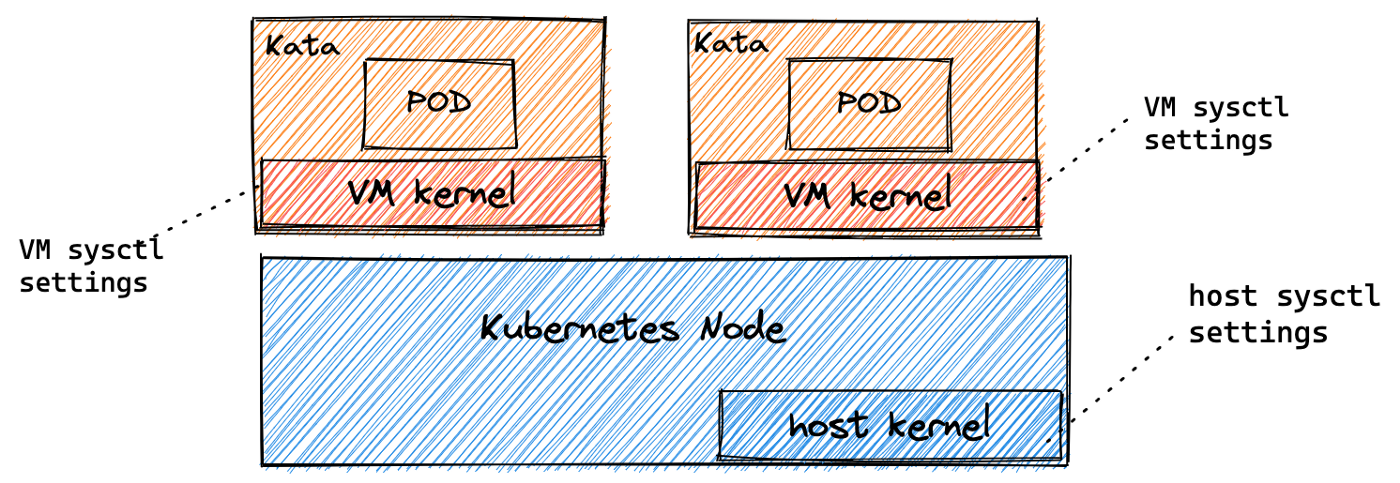

Sysctl settings can be grouped into two categories:

- Namespaced: these can be set independently for each pod in a Kubernetes cluster.

- System-wide: these are global and affect the entire Kubernetes node.

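Kubernetes further classifies namespaced sysctls as safe or unsafe. Safe sysctls are properly isolated, have no impact on other pods running on the same node, and are enabled by default. Unsafe sysctls are disabled by default and must be explicitly allowed on each node by the cluster administrator. For details, refer to the Kubernetes sysctl documentation.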
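As a rough illustration of these categories, the shell function below sorts a sysctl name into safe, unsafe (namespaced) or node-level. It is a hypothetical helper (classify_sysctl is not a real tool). The namespaced groups follow the Kubernetes docs (kernel.shm*, kernel.msg*, kernel.sem, fs.mqueue.* and most of net.*), but the safe list is only a subset and changes between Kubernetes releases. The two sysctls used in this lab fall into different categories: kernel.msgmax is namespaced but unsafe, and kernel.core_pattern is global to the node.

```shell
# classify_sysctl NAME
# Hypothetical helper: prints "safe", "unsafe-namespaced" or "node-level" for a
# sysctl name. The patterns are a partial reading of the Kubernetes sysctl docs;
# the safe list grows between releases, so check the docs for your version.
classify_sysctl() {
  case "$1" in
    # A subset of the sysctls that Kubernetes documents as safe
    kernel.shm_rmid_forced|net.ipv4.ip_local_port_range|net.ipv4.tcp_syncookies|\
    net.ipv4.ping_group_range|net.ipv4.ip_unprivileged_port_start)
      echo safe ;;
    # net.* entries the docs call out as exceptions; treated as node-level here
    net.netfilter.nf_conntrack_max|net.netfilter.nf_conntrack_expect_max)
      echo node-level ;;
    # Namespaced groups: IPC (kernel.shm*, kernel.msg*, kernel.sem), POSIX
    # message queues (fs.mqueue.*) and the network namespace (net.*)
    kernel.shm*|kernel.msg*|kernel.sem|fs.mqueue.*|net.*)
      echo unsafe-namespaced ;;
    # Anything else, such as kernel.core_pattern, is global to the node
    *)
      echo node-level ;;
  esac
}

classify_sysctl kernel.msgmax          # prints: unsafe-namespaced
classify_sysctl kernel.core_pattern    # prints: node-level
```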

As a result, clusters typically do not allow system-wide or unsafe sysctls to be set as a general-purpose mechanism.

So if your workloads need these sysctls, what are your options today?

- Allocate a specific node or set of nodes.

- Use a VM-based container runtime that gives each pod its own kernel.

Dedicating a specific node or set of nodes is a practical solution when a class of workloads needs the same settings. However, if different classes of workloads need different kernel settings, a VM-based container runtime is a better alternative.

Remember that there is no one-size-fits-all approach; we have seen cases where both options are used together.

In this hands-on lab, let’s look at a few examples of how Kata Containers, a VM-based container runtime, can help you apply per-pod kernel settings in a safe, low-risk manner.

Each Kata pod runs its own guest kernel, so a sysctl set inside the pod affects only that pod. You can use a privileged init container to modify the kernel settings.

Lab for Configuring Per-Pod Kernel Settings

Prerequisites

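- A Kubernetes cluster bootstrapped with kubeadm, with kubectl and kubelet installed

- A CRI-compatible container runtime: containerd or CRI-O

- Nodes with hardware virtualization support (for example KVM), since each Kata pod runs in a virtual machine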

Installing Kata Containers

The easiest way to deploy Kata Containers in a Kubernetes cluster is with kata-deploy. It runs as a DaemonSet in the kube-system namespace. On each node, it installs all the binaries and artifacts needed to run Kata Containers and configures the container runtime to use them.

- Create the RBAC resources that kata-deploy needs:

kubectl apply -f https://raw.githubusercontent.com/kata-containers/kata-containers/main/tools/packaging/kata-deploy/kata-rbac/base/kata-rbac.yaml

- Then deploy the stable version of kata-deploy:

kubectl apply -f https://raw.githubusercontent.com/kata-containers/kata-containers/main/tools/packaging/kata-deploy/kata-deploy/base/kata-deploy-stable.yaml

- Check the status of the kata-deploy pods in the kube-system namespace and wait until they are ready:

kubectl get pods -n kube-system

kubectl -n kube-system wait --timeout=10m --for=condition=Ready -l name=kata-deploy pod

- Check that kata-deploy has added the Kata Containers labels to the node:

kubectl get nodes --show-labels | grep kata

- Next, configure a runtime class for Kata Containers by creating a Kubernetes resource of kind RuntimeClass:

# runtimeclass.yaml
kind: RuntimeClass
apiVersion: node.k8s.io/v1
metadata:
  name: kata-qemu
handler: kata-qemu
overhead:
  podFixed:
    memory: "160Mi"
    cpu: "250m"
scheduling:
  nodeSelector:
    katacontainers.io/kata-runtime: "true"

kubectl apply -f runtimeclass.yaml

Here we create a runtime class named kata-qemu (metadata.name). Its handler field must match a runtime handler that kata-deploy has configured in the node's container runtime. Pods that set runtimeClassName: kata-qemu then run inside a lightweight VM. Depending on the hypervisor, you can use other runtime classes, for example kata-clh for Cloud Hypervisor and kata-fc for Firecracker.
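To try one of the other handlers, you only need to change the RuntimeClass's name and handler. The sketch below is a hypothetical runtimeclass_manifest shell function that prints a manifest in the same shape as runtimeclass.yaml. It assumes kata-deploy has configured the chosen handler on your nodes. Its default overhead values are simply copied from the kata-qemu example and are not tuned for Cloud Hypervisor or Firecracker.

```shell
# runtimeclass_manifest HANDLER [MEMORY] [CPU]
# Hypothetical helper: prints a RuntimeClass shaped like runtimeclass.yaml for
# another Kata handler. The default overhead is a placeholder copied from the
# kata-qemu example.
runtimeclass_manifest() {
  handler=$1
  memory=${2:-160Mi}
  cpu=${3:-250m}
  cat <<EOF
kind: RuntimeClass
apiVersion: node.k8s.io/v1
metadata:
  name: $handler
handler: $handler
overhead:
  podFixed:
    memory: "$memory"
    cpu: "$cpu"
scheduling:
  nodeSelector:
    katacontainers.io/kata-runtime: "true"
EOF
}
```

For example, runtimeclass_manifest kata-clh | kubectl apply -f - would create a kata-clh runtime class from the printed manifest.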

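The runtime class also defines a pod overhead (overhead.podFixed). This is a fixed amount of memory and CPU that covers the VM and other runtime components, and it is added to the resource accounting of every pod that uses this runtime class. Finally, scheduling.nodeSelector ensures that such pods are only scheduled on nodes carrying the katacontainers.io/kata-runtime=true label that kata-deploy applies.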
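To make the overhead concrete: when scheduling the pod and sizing its cgroup, Kubernetes adds the podFixed values on top of the sum of the containers' resource requests. The hypothetical pod_effective_request function below does that arithmetic for one resource at a time. It assumes all values share the same unit and ignores init containers, whose requests Kubernetes accounts for separately. The container requests in the example are made up.

```shell
# pod_effective_request OVERHEAD REQUEST...
# Hypothetical helper: adds a RuntimeClass podFixed overhead to the sum of the
# app containers' requests. All numbers must share one unit (millicores for CPU,
# Mi for memory); parsing quantities such as "1" or "1Gi" is left out, and init
# containers are ignored.
pod_effective_request() {
  total=$1
  shift
  for req in "$@"; do
    total=$((total + req))
  done
  echo "$total"
}

# kata-qemu overhead from runtimeclass.yaml (250m CPU, 160Mi memory) plus two
# containers with made-up requests of 500m/256Mi and 100m/64Mi
pod_effective_request 250 500 100    # CPU in millicores: prints 850
pod_effective_request 160 256 64     # memory in Mi: prints 480
```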

- See more information about the kata-qemu runtime class:

kubectl get runtimeclass

kubectl describe runtimeclass kata-qemu

Using an init container to set a specific sysctl

An advantage of the privileged init container approach is that you don’t need to give privileged access to the application container.

- Create a pod with an init container and an application container:

# sysctl-initcont.yaml
apiVersion: v1
kind: Pod
metadata:
  name: test-sysctl
spec:
  runtimeClassName: kata-qemu
  containers:
  - name: test-sysctl
    image: quay.io/fedora/fedora:35
    command:
    - sleep
    - "infinity"
  initContainers:
  - name: init-sys
    securityContext:
      privileged: true
    image: quay.io/fedora/fedora:35
    command: ['sh', '-c', 'echo "65536" > /proc/sys/kernel/msgmax']

kubectl apply -f sysctl-initcont.yaml

kubectl get pods
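To confirm that privilege stays confined to the init container, you could list the application containers' securityContext.privileged values with kubectl's JSONPath output and check that none of them is true. The app_containers_unprivileged helper below is a hypothetical sketch. It only inspects the privileged flag it is given, not other ways of granting extra privileges such as added capabilities.

```shell
# app_containers_unprivileged FLAGS
# Hypothetical helper: FLAGS is the space-separated list of
# securityContext.privileged values printed by kubectl.
# Succeeds unless one of the values is "true".
app_containers_unprivileged() {
  case " $1 " in
    *" true "*) return 1 ;;
    *) return 0 ;;
  esac
}

# Example use against the test-sysctl pod (requires cluster access):
# flags=$(kubectl get pod test-sysctl -o jsonpath='{.spec.containers[*].securityContext.privileged}')
# app_containers_unprivileged "$flags" && echo "no privileged application containers"
```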

As shown below, changing msgmax from the application container fails, because that container is not privileged.

- Open a shell in the application container. Inside it, check the current value of msgmax (it should be 65536, as set by the init container) and then try to change it:

kubectl exec -it test-sysctl -- bash

cat /proc/sys/kernel/msgmax

echo 4096 > /proc/sys/kernel/msgmax
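The init container in this example writes to /proc/sys/kernel/msgmax directly, while the next example uses sysctl -w. Both reach the same kernel parameters. A sysctl name maps to a file under /proc/sys with the dots replaced by slashes, so sysctl -w kernel.msgmax=65536 and echo 65536 > /proc/sys/kernel/msgmax are equivalent. The two helper names below are hypothetical and only illustrate that mapping.

```shell
# Hypothetical helpers: convert between a sysctl name and its /proc/sys path.
# Path components that themselves contain a dot (some interface names, for
# example) are not handled by this simple dot/slash swap.
sysctl_to_path() {
  printf '/proc/sys/%s\n' "$(printf '%s' "$1" | tr . /)"
}

path_to_sysctl() {
  printf '%s\n' "${1#/proc/sys/}" | tr / .
}

sysctl_to_path kernel.core_pattern       # prints: /proc/sys/kernel/core_pattern
path_to_sysctl /proc/sys/kernel/msgmax   # prints: kernel.msgmax
```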

Using an init container to set the core dump pattern

- Create a pod with an init container and an application container, and run the sysctl command in the init container:

# coredump-initcont.yaml
apiVersion: v1
kind: Pod
metadata:
  name: test-coredump
spec:
  runtimeClassName: kata-qemu
  initContainers:
  - name: init-coredump
    image: busybox:1.28
    command: ['sh', '-c', "sysctl -w kernel.core_pattern=core.%P.%u.%g.%s.%t.%c.%h.%e"]
    securityContext:
      privileged: true
  containers:
  - name: test-coredump
    image: quay.io/fedora/fedora:35
    # Keep the container running; the image's default bash exits at once without a terminal
    command:
    - sleep
    - "infinity"

kubectl apply -f coredump-initcont.yaml

kubectl get pods
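To preview the file names this pattern produces, recall what its specifiers mean (see core(5)):

- %P is the PID in the initial PID namespace.
- %u and %g are the real UID and GID.
- %s is the signal number.
- %t is the dump time in seconds since the Epoch.
- %c is the core file size soft limit.
- %h is the hostname.
- %e is the executable name.

Because the pattern does not start with /, core files are written relative to the crashing process's working directory. The hypothetical expand_core_pattern function below substitutes values that you supply; the example uses made-up values. It mimics the documented rules that %% is a literal % and that unknown specifiers and a trailing % are dropped, but it is not the kernel's implementation.

```shell
# expand_core_pattern PATTERN [KEY=VALUE ...]
# Hypothetical helper: previews the name built from a core_pattern template,
# using values you supply (e.g. P=4242 for %P).
expand_core_pattern() {
  pattern=$1
  shift
  printf '%s\n' "$pattern" | awk -v kv="$*" '
    BEGIN {
      # Turn "P=4242 u=0 ..." into a lookup table: val["P"] = "4242", ...
      n = split(kv, pairs, " ")
      for (i = 1; i <= n; i++) {
        split(pairs[i], p, "=")
        val[p[1]] = p[2]
      }
    }
    {
      line = $0; len = length(line); out = ""
      for (i = 1; i <= len; i++) {
        c = substr(line, i, 1)
        if (c != "%") { out = out c; continue }
        if (i == len) break            # a trailing % is dropped
        s = substr(line, ++i, 1)
        if (s == "%") out = out "%"    # %% is a literal %
        else if (s in val) out = out val[s]
        # any other specifier, or one without a supplied value, is dropped
      }
      print out
    }'
}

expand_core_pattern 'core.%P.%u.%g.%s.%t.%c.%h.%e' \
  P=4242 u=1000 g=1000 s=11 t=1700000000 c=1048576 h=test-coredump e=myapp
# prints: core.4242.1000.1000.11.1700000000.1048576.test-coredump.myapp
```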

Conclusion

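In this blog, we have seen how to apply per-pod kernel settings with Kata Containers, using a privileged init container so that the application container itself does not need to be privileged.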