In the third session of the “Machine Learning on Kubernetes” Book Club, we discussed how to take a machine learning model and containerize it. You can find more details about the series and the previous two recordings here.

We started the session with a quick review of machine learning and then looked at a basic Flask application that exposes a simple endpoint.

    from flask import Flask, request

    app = Flask(__name__)


    @app.route('/', methods=['POST'])
    def add():
        # Form values arrive as strings, so convert them before adding
        a = request.form["a"]
        b = request.form["b"]
        return str(int(a) + int(b))


    if __name__ == '__main__':
        app.run(port=8080)

We then built a basic linear regression model and packaged it with Python’s pickle module.
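
To make these two steps concrete, here is a minimal standard-library sketch of what they amount to for a single feature: fit a line by ordinary least squares, then serialize the fitted parameters with `pickle` and load them back. The data points and the helper names `fit_line` and `predict` are made up for illustration and are not from the session.

```python
import pickle
from statistics import mean


def fit_line(sizes, prices):
    """Ordinary least squares for one feature: price ~ slope * size + intercept."""
    x_bar, y_bar = mean(sizes), mean(prices)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(sizes, prices))
    sxx = sum((x - x_bar) ** 2 for x in sizes)
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return {"slope": slope, "intercept": intercept}


def predict(model, size):
    """Evaluate the fitted line at a given size."""
    return model["slope"] * size + model["intercept"]


# Hypothetical data, chosen so that price = 200 * size exactly
sizes = [1000, 1500, 2000, 2500]
prices = [200000, 300000, 400000, 500000]

model = fit_line(sizes, prices)

# Round trip through pickle: bytes out, an equal object back in
blob = pickle.dumps(model)
restored = pickle.loads(blob)

print(predict(restored, 2200))
```

`pickle.dump` to a file works the same way as `pickle.dumps` here, except that the bytes are written to disk. Only unpickle files you trust, since loading a pickle can execute arbitrary code. The scikit-learn script from the session follows.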

    import pickle

    import pandas as pd
    from sklearn.linear_model import LinearRegression
    from sklearn.model_selection import train_test_split

    # Load the data: house size is the feature, price is the target
    dataset = pd.read_csv('house_price.csv')
    X = dataset.loc[:, ['size']].values
    y = dataset.loc[:, ['price']].values

    # Hold out 40% of the rows for testing
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.4, random_state=42)

    lr = LinearRegression()
    lr.fit(X_train, y_train)

    y_pred = lr.predict(X_test)
    print("Predictions for the test set")
    print(y_pred)

    # Serialize the trained model to disk
    with open('lr.pkl', 'wb') as model_pkl:
        pickle.dump(lr, model_pkl)

    # Load it back to check that the pickled model still predicts
    with open('lr.pkl', 'rb') as model_pkl:
        model = pickle.load(model_pkl)

    print("One sample prediction from the loaded model")
    print(model.predict([[2200]]))

Once our model was packaged, we used another Flask program to expose it via a web endpoint.

import numpy as np

import pickle

from flask import Flask, request

model = pickle.load( open('lr.pkl','rb'))

app = Flask(__name__)

@app.route('/predict', methods=['POST'])

def predict_size():

size = int(request.form["s"])

print (size)

prediction = model.predict([[size]])

return str(prediction)

if __name__=='__main__':

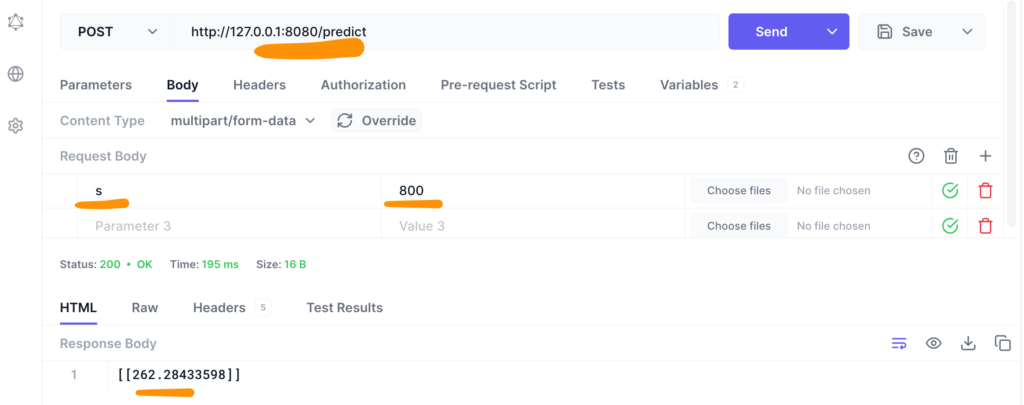

app.run(host="0.0.0.0", port="8080")After running the program we could access the model /predict endpoint.
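
As a rough sketch of how a client might call this endpoint, the snippet below builds a form-encoded POST with the standard library. It assumes the Flask program above is running locally on port 8080; the URL and the helper names `build_predict_request`, `parse_prediction`, and `predict_price` are assumptions for illustration. Because the handler returns `str(prediction)`, the response body looks like a printed array (something like `[[123.4]]`), so the client strips the brackets before converting to a float.

```python
import urllib.parse
import urllib.request

# Assumed address of the locally running Flask app
PREDICT_URL = "http://localhost:8080/predict"


def build_predict_request(size, url=PREDICT_URL):
    """Build a POST request carrying the form field "s" that the handler reads."""
    body = urllib.parse.urlencode({"s": size}).encode("utf-8")
    # With data supplied, urllib adds the form-encoded content type by default
    return urllib.request.Request(url, data=body, method="POST")


def parse_prediction(text):
    """Turn a body such as "[[123.4]]" into a float."""
    return float(text.strip().strip("[]"))


def predict_price(size):
    """Send the request and parse the reply; needs the server to be running."""
    with urllib.request.urlopen(build_predict_request(size)) as response:
        return parse_prediction(response.read().decode("utf-8"))
```

With the server up, `predict_price(2200)` would return the model’s prediction for a house of size 2200. The same pattern applies to the earlier `/` endpoint by sending the fields `a` and `b` instead of `s`.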

Once this was done, we just had to put all of the above Python code, along with the CSV file, into a Docker image so that a container could serve our model.

    FROM continuumio/anaconda3

    RUN mkdir /app
    COPY . /app
    EXPOSE 8080
    WORKDIR /app
    RUN pip install -r requirements.txt
    CMD python house_price_predict_api.py

Once the image is built, we can simply run the container and serve our model through it.

    docker container run -p 8080:8080 <image_name>

Please go over the recording to follow the steps as we did during the session. In the next session, we’ll explore how we can use MLflow to track different experiments.